This whole story starts with a paper that was published by researchers at UC Berkeley on August 22, 2018, demonstrating how a computer can superimpose a “digital skeleton” onto the likeness of a human being to make the person appear to be a good dancer. It sounds like fun, right?

Who among us with two left feet wouldn’t want to present a video of ourselves dancing like the next Michael Jackson? However, the fact that we’re mixing artificial intelligence with video editing and rendering software could have some pretty sinister implications if the technology is abused.

Introducing the Researchers’ Work

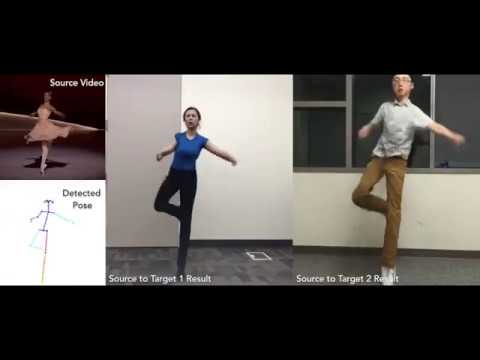

The paper mentioned earlier demonstrates how machine learning can be used to “transfer motion between human subjects in different videos,” effectively superimposing the movements of one person onto another. The researchers showed the results of their work in an accompanying video.

The results look janky and somewhat funny, but as this technology continues to develop, it could pose real challenges for us as a society. We’re using the term “AI” loosely here: this is a video-editing technique built on machine learning rather than a thinking machine. Still, it is certainly one of the precursors to artificial intelligence, in the sense that the computer is “imagining” someone in a situation.
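To make the “digital skeleton” idea a little more concrete, here is a minimal sketch of one small piece of the puzzle: taking the joint positions of a dancer in one video and rescaling them to fit a person of a different size standing somewhere else in the frame. This is a simplified illustration and not the researchers’ actual code. The joint names, the `Pose` type, and the `retarget` function are all hypothetical, and the hard part (having a trained model draw a realistic person over the skeleton) is not shown at all.

```python
from typing import Dict, Tuple

# Hypothetical representation: joint name -> (x, y) in pixels, y grows downward.
Point = Tuple[float, float]
Pose = Dict[str, Point]


def body_height(pose: Pose) -> float:
    """Vertical distance from head to ankle, in pixels."""
    return pose["ankle"][1] - pose["head"][1]


def retarget(source_pose: Pose, source_ref: Pose, target_ref: Pose) -> Pose:
    """Rescale and shift a source pose so it fits the target person.

    source_ref and target_ref are assumed to be upright reference poses
    of each person, used only to compare their sizes and positions.
    """
    scale = body_height(target_ref) / body_height(source_ref)
    sx, sy = source_ref["ankle"]
    tx, ty = target_ref["ankle"]
    # Express every joint relative to the source ankle, scale it,
    # then place it relative to the target ankle.
    return {
        joint: (tx + (x - sx) * scale, ty + (y - sy) * scale)
        for joint, (x, y) in source_pose.items()
    }


if __name__ == "__main__":
    dancer_ref = {"head": (100.0, 50.0), "ankle": (100.0, 250.0)}
    target_ref = {"head": (300.0, 100.0), "ankle": (300.0, 200.0)}
    dancer_move = {"head": (100.0, 50.0), "hand": (160.0, 90.0), "ankle": (100.0, 250.0)}
    print(retarget(dancer_move, dancer_ref, target_ref))
```

In this toy example the target is half the dancer’s height, so the raised hand ends up half as far from the body once it is mapped across. A real system would repeat this for every frame and every joint before generating the final image.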

Let’s Talk About Deepfakes

Use “deepfake” as a search term on YouTube, and you’ll find many work-friendly videos that present faceswaps of celebrities and some semi-convincing falsifications produced on the spot. Although this is often done in good humor, not everyone has good intentions with this software.

Deepfakes take a facial profile of one individual and superimpose it over another individual’s face. You can see where we’re going with this, and if you can’t, here it is: many people use this technique to create “revenge porn” videos of others, leaving victims in a very intimidating situation where their likeness is attached to explicit content and they have no recourse. To a viewer, it looks exactly as if they did whatever is going on in the video.

A more advanced overlay technology could allow computers to draw a virtual skeleton that would then allow malicious actors to superimpose an entire person into a potentially compromising situation. What we end up with is a cocktail of tools that could be used to fabricate evidence. A shooter could be made to look like someone else in crime scene footage.

What Effect Would This Have Legally?

Most countries already have laws in place that clearly define falsification of events or bearing false witness. As far as “virtual impersonation” of an individual is concerned, using these techniques to modify a video already falls under the purview of these laws.

Where things get complicated is when it comes to courts admitting video evidence. If we have the technology to doctor videos to the extent that we do right now, imagine what we would be capable of in five years’ time. How would a court be able to consider the video submitted reliable? It’s not very hard to make a duplicate act exactly like an original. After all, it’s all digital.

Courts of law are already very reluctant to accept video evidence into their case files. This progression of technology may actually push them over the edge, to the point where they can’t trust anything that was caught on video for fear of accepting something that’s doctored.

In the end, whether we like it or not, this kind of technology is here to stay, and we will have to adapt accordingly. One of the first steps we could take is to spread awareness that videos can be faked far more easily, and with far fewer resources and skills, than they could be back in the 80s. Eventually, people will become more skeptical about the videos they see.

“The technology is only becoming more and more advanced … People are going to be scared. And I genuinely sympathize with them. But since the technology can’t be uninvented, we have to advance with it. I’m far more a proponent of the deepfakes algorithm itself and its potential rather than what it’s currently being used for. But then again, welcome to the Internet.”

This is what one Reddit user told The Verge in an article published in February 2018.

How do you think we should spread awareness? What else should we do to adapt? Let’s have this discussion in the comments!
